The Forgotten Century

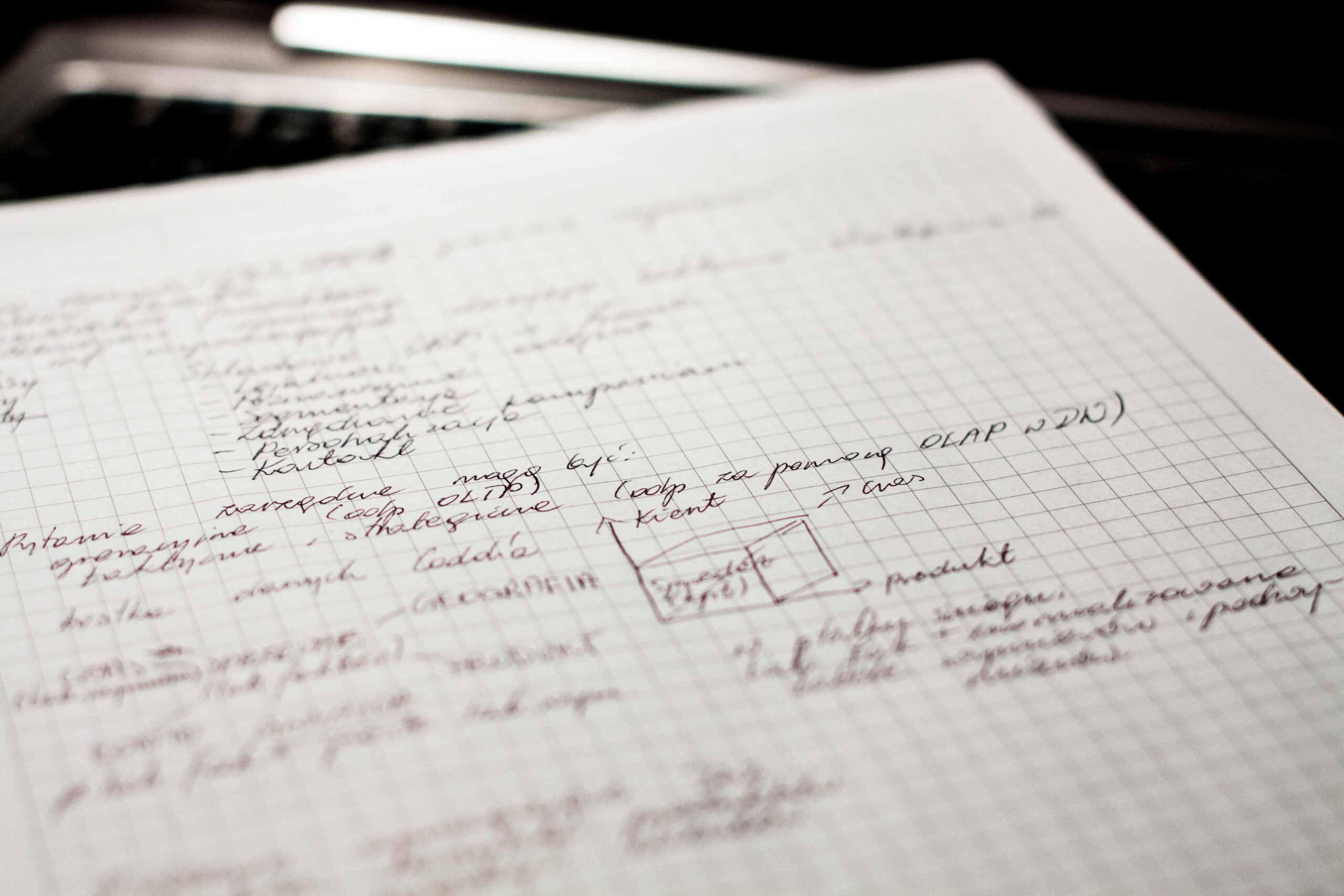

Professor Nimmi Rangaswamy's thesis that was stored in a floppy disk can't be accessed now.

The absence of time travel means that we as humans are incapable of experiencing events before our time. Directly, anyway. Our knowledge of the past is heavily dependent on physical records of events and daily occurrences – in pictorial form, by physical evidence left behind, and most often by means of writing. Any hindrance to access or recognition of such records means loss of our history. Information before (or in) our time that we have no memory of is, to say the least, a bit of a problem. History needs to be conveyed to the future. Written down.

And with the advent of rapid advances in modern technology and constant recording of every form of information imaginable, we are paradoxically in a time where future historians are more likely to know more about the start of the twentieth century than they will about the twenty-first. But how?

The Technical Problem

“We are nonchalantly throwing all of our data into what could become an information black hole without realizing it. We digitise things because we think we will preserve them, but what we don’t understand is that unless we take other steps, those digital versions may not be any better, and may even be worse, than the artifacts that we digitised”

Vint Cerf

The father of the Internet

The key issue here is not with the journalling of information in the first place as was the case in prehistoric times. It’s with (future) access to this data. If all the information in the world could be saved in permanent, non-degrading data stores but future generations were unable to decipher what it meant, then it is as good as lost. At a basic, technology independent level this loss is already exhibited – interpretation issues as with language of the Indus civilisation (i.e, artefacts we do have are undecipherable), and information loss by destruction of medium, such as writings on papyrus and parchment that no longer exist.

But with digital data, the situation is exaggerated – primarily for two reasons. The matter of the sheer volume of information coded into a digital form making losses that much more obvious is significant (for reference, we have till date generated about 16 Zettabytes [ZB] of data, with an estimate for 163ZB by the year 2025) but the second, more crucial factor, lies in the nascency of digital media combined with the multi-level tech stack needed to make sense of the same.

Digital data is varied. While in essence just strings of zeros and ones, the information has to be encoded in a specific format at several levels of the system. There’s decisions at the hardware level, error correcting codes, general compression algorithms, software specific filetypes, filetype encoding formats, and so much more that’s often unaccounted for. All of these levels of information encoding and ways to interpret it need to be known by the interpreting system (both the software and the hardware) in order to make sense of strings of (what would be otherwise) gibberish. This is not in itself a problem. It is a solution to other issues with software development and information theory. But it does become one when the stack is unstable. And the technological stack we use is. There’s obsolete hardware (like floppy disks) no longer in production, and often hard to find or replicate. File formats, especially proprietary ones with no documentation and custom interpretation software are known to effectually “lock” all their contained data when the software goes off the market and eventually most systems (or its usage license expires). NASA has lost data from some of its earliest missions to the moon because the machines used to read the tapes were scrapped and cannot be rebuilt1. Laserdisc doesn’t exist anymore.

In 1086 William the Conqueror completed a comprehensive survey of England and Wales. “The Domesday Book”, as it came to be called, contained details of 13,418 places and 112 boroughs—and is still available for public inspection at the National Archives in London. Not so the original version of a new survey that was commissioned for the 900th anniversary of “The Domesday Book”. It was recorded on special 12-inch laser discs. Their format is now obsolete.

The Economist

April 28, 2012

Admittedly, it’s not an immediately obvious problem at first sight. A lot of what we have today “just works”. Most major files use standard formats interpretable by a majority of systems, hardware seems to work just fine with the overlaying software (unless it’s Ubuntu and Nvidia GPUs), JPEG loss and other issues associated with lossy file formats are largely memes outside of the enthusiast community. For a large majority of current systems we work with today, everything looks fine and very functional.

The gaps start to show on looking at it historically. Like the example with floppy disks. It certainly is still possible to get hardware that will run and read the files off the disk, but it will require at least the existence of old hardware, and if not that a blueprint to reconstruct the hardware from. Once that’s done, maybe an obscure interpreter is required to make sense of some obsolete word processor output file. But it can be done. For another example try opening a word document made in Office 365 (but not with compatibility mode) in Word 98. The list can go on. VHS. Old console games made for the Amiga. Trying to play a PS3 game on your PS4.

This is still a solvable problem, given enough time and effort into finding the right interpreting system (or building one). Complications arise when there exists none such – and building one is infeasible for whatever closed source reason. Considering this you might wonder how exactly such an unthinkable event could possibly happen. So imagine this situation. A computer system was developed in the 1960s using Integrated Circuits for the first time, with poor documentation (nothing out of place as most of us are well aware of) of system architecture and nothing resembling modern operating systems, and custom data formats custom developed for the job. Data was collected using the system and for it, and then archived in magnetic tapes. Then, in a couple of years or five, the computers were thrashed. Repurposed. No documentation of the working system existing (WHY? I hear you ask. Because people are stupid). In say fifteen more years you want to read the data again.

But how?

In this context consider the future with our current systems, is the possibility of data loss by such means really such a far-fetched concept?

Summarising the technical problem in a systematic way, we look at the ways in which it can manifest itself:

- Media or Hardware obsolescence: Lack of hardware to read data formats, such as the NASA tapes, the 12 inch laserdiscs containing The Doomsday Book, or VHS tapes in the future. This is particularly a problem for any removable media that are theoretically readable only as long as a suitable hardware reader is found.

- Software and format obsolescence: Lack of software to read available digital formats.

- Proprietary file formats are very susceptible to this. Considering how many such are poorly documented and closed off to inspection, in the event of software fault and/or the group behind it ceasing to exist all the data contained within is unreadable. The seemingly everlasting argument of VHS vs DVD is now history.

- This is also an issue with data protected under DRM. This gets tricky because the question of ownership becomes a factor to consider here; a matter for a separate write-up and a few legal battles in its own.

- The Legal and Regulatory aspect of it. A bulk of critical data generated across the world data is access and use protected, not only in the case of official government secret acts but also with copyrighted material like books, software, and the like. As The Economist puts it, “Although technical problems can usually be solved, regulatory obstacles are harder to overcome”.

The Impact on History

And by extension, on Society and Culture

“If we’re not careful, we will know more about the beginning of the 20th century than the beginning of the 21st century”

Adam Farquhar

British Library’s digital-preservation efforts in-charg

Why do we need history?

From the given discussion so far, it is obvious how unchecked information loss can affect our records of the present for the future, affecting record of history. But why is that a such a crucial problem, one that deserves separate mention?

This section looks at just the impact of history, having cleared the fact of loss earlier.

Loss of Cultural History in larger society

India has a rich history which has been passed on to us for generations over generations for the past 2000 years when it was finally written down formally into books. All that history is recorded and has been relatively well preserved over time. Not necessarily the case with today. Imagine, ten years from now, the information about this article may be lost just because it wasn’t backed up. Maybe the printed version will survive a few more years before it gets decomposed in the municipal dump. History that is being created everyday is getting lost. And it is not just the trivial bits but even the (relatively) more important ones. Non-print fringe newspapers, relying on solely web releases might disappear without a trace if the domain name expires and the web archive hadn’t stored a snapshot. Hobbyist forums, dedicated channels – not obviously useful to all but relevant nonetheless, every loss is a missing puzzle piece in the larger picture. An argument can be made that data important to us is preserved, as in the past; and for response I redirect you to the case of NASA and the tapes.

Such loss of history is a negative. Without information on one’s past, there is no culture, no sense of belonging or recognition with the past. Achievements are held in individual glory with little regard for an overall contribution to a larger “whole”, and there is a sort of disconnect between the individual and the society they reside in. Moreover, with no record of past history people stop expecting preservation of their present, so contribution is seen as futile; so why should people bother? For a working demonstration of this situation, look no further than IIIT itself. Although this is more a case of no information being recorded than one where information is lost, it has by large a similar impact. As a quote from an earlier article in Ping! explains,

“When you know that what you are doing won’t leave a legacy, you aren’t as enthusiastic anymore. It’s selfish. It’s human. The sense of change for worse or better, has always been the missing ingredient. A sense of apathy creeps in, when there is no sense of legacy, where there is no urgency. This college-wide disinterest is the manifestation of an Orwellian dystopia; a culture without its own history, a culture without an identity. Where in the Orwellian world, history was perpetually rewritten, here, it’s lost for never having been written in the first place.”2

Anurag Ghosh, “Memento

Ping!, Monsoon 2016

We couldn’t find anyone better. Again

The effect on Personal History

Personal records are particularly vulnerable to such loss. Physical record of personal history – photo albums, certificates, and other such assorted items – aren’t invulnerable to physical decay or being misplaced, but with them people are well aware of possible factors affecting their shelf life and take measures to preserve the ones they hold dear. With digital data, this is often not the case. Unlike major IT-core corporations that (hopefully) make conscious efforts to ensure business critical data is accessible over time (more on this later), the average user treats the digital land as it were a physical locker, saving photos to their local hard disk with little regard for forwards compatibility; if the photos they took were in a proprietary RAW format that will lose support in another year they would conveniently ignore the folder until a decade later when they need to show their friends that they did really go to the London Eye but now there’s no compatible player to show the photos.

One could say the (personal storage) situation is improving, as more and more people opt for cloud based services to store their images. This definitely seems to be more sustainable, as such cloud services are expected to maintain compatibility for all their data to avoid on losing their customer base. This is not necessarily the case. If the data is stored on the server of some no-name company with a poorly defined data exit policy, it’s pretty much as good as gone3. And even with “reliable” providers, the service is almost always proprietary with questionable legal restrictions; consider the (rather ironic) example of when Amazon removed all copies of the book “1984” from the Kindles of customers who had seemingly bought the book4. It is unlikely such extremes would be seen on a regular basis, but over time it’s not altogether an unlikely event.

Measures we can take

There is no be-all end-all solution to the problem.

The technological world is nascent, ever changing. As long as the situation stays the way it is, the stack of requirements for information access shall remain temporal. But measures can be taken to minimize the impact, potentially maintain enough till permanent solutions can be found.

There are different ways to tackle this issue all the way from personal to industrial. On a personal level, one may keep a backup of all their personal files on the latest available stack which is least bound to get replaced in the near future. From this if we consider that using a popular format to store a particular bit of data is effective, we should also consider the possibility of some formats just dying out. And in this case what should be given preference? Data that can be losslessly encoded in a given format, or the popularity of some other format that is more lossy? For example FLAC vs MP3 files to encode audio. FLAC stores more information losslessly, but lossy MP3 is more popular; and if (hypothetically) FLAC dies out before MP3 than the “lossy” format is effectively more lossless, isn’t it?

Another important step that can be taken is to use open file formats; and open specification systems in general. In such case, even if the hardware is wiped completely off the face of the earth, it is theoretically possible to reconstruct a reader. Not the best “cure” to data incompatibility per se, but you know what they say about prevention.

This article is part of the column ‘Eye To The Future’ that is dedicated to long form articles based on contemporary and future impact of technology and scientific impact on society.

Call for pitches: Interested in contributing to this column? Shoot an email to [email protected] with your pitch for the column.

- “Bit rot – Digital data – The Economist.” 28 Apr. 2012, https://www.economist.com/leaders/2012/04/28/bit-rot. Accessed 30 Oct. 2018. ↩︎

- “Memento – PING!.” https://pingiiit.org/memento/. Accessed 3 Nov. 2018. ↩︎

- “The hug heard around the company. : talesfromtechsupport – Reddit.” 4 Apr. 2017, https://www.reddit.com/r/talesfromtechsupport/comments/63frsn/the_hug_heard_around_the_company/. Accessed 3 Nov. 2018. ↩︎

- “Amazon Erases Orwell Books From Kindle Devices – The New York ….” 17 Jul. 2009, https://www.nytimes.com/2009/07/18/technology/companies/18amazon.html. Accessed 3 Nov. 2018. ↩︎

Autonomous Cars: A Developing Challenge

Autonomous Cars: A Developing Challenge  A Twisted Way to Learn

A Twisted Way to Learn  Affording Unemployment for All

Affording Unemployment for All  Beyond the Black Box: IIIT’s New Chapter with Professor Sandeep Shukla

Beyond the Black Box: IIIT’s New Chapter with Professor Sandeep Shukla  The Great Coupling

The Great Coupling  PJN – Professor and No-Longer-Director

PJN – Professor and No-Longer-Director  The Lazy Third Eye: 2024-25 Annual Report edition

The Lazy Third Eye: 2024-25 Annual Report edition