A Twisted Way to Learn

When Modiji observed at the Silicon Valley Digital India and Digital Technology dinner in 2015 that “Twitter has turned everyone into a reporter,” he was merely exemplifying an inescapable thread that ties technological progress to the radical social transformations we are going through. While Modiji made the point in the context of giving more visibility and strength to the voice of the common man, a commendable point really, one can’t help but notice the problems associated with cultivating such a culture.

As Steve Tobak, a Silicon Valley consultant and high-tech senior executive, says of news posts on your Facebook feed (and this holds pretty much for your Twitter feed too), “How much of it is what you and I would consider journalism? That depends on who and what you follow. For some, much of it is legit. For others, very little is genuine news. And for most, it’s like trying to get a drink from a firehose that alternates fresh and dirty water. You never know what you’re going to get.”

For the Machine Learning (ML) enthusiasts among us, fake news then seems like the new problem to tackle, and I can only guess that at least some of us are already racking our brains for the best discriminative model (i.e. a model that can distinguish between classes of entities, here genuine and fake news).

However, there are situations in life when we might be facing a hydra, à la “Cut off a limb, and two more shall take its place!”, without even realizing it, and this could be one of them. To scale the automatic generation of personalized news feeds to millions of users, most social networking companies turned to ML techniques. Their attempts were closely linked to the desire to maximize user engagement (and thus shareholder value). Because other metrics weren’t accounted for, this eventually resulted, as we can observe today, in the proliferation of fake news and the creation of echo chambers. Posts expected to maximize your engagement (i.e. “relevant” to you, in corporate speak) would appear on your “news” feed regardless of their veracity, unlike in more traditional forms of journalism, which had more nuanced curation and vetting procedures.

Even if no algorithm takes our hand and makes us click on that clickbaity link, it has built a behavioural model tailor-made to know what we are susceptible to, and has thus learned the “right fix” for our minds. It inadvertently influences us more than we bargained for.

The question one must ask is whether the objective function is correct. Profit is one thing, but optimizing purely for profitable metrics may have many unintended consequences. Yet it is harder to quantify what a “more correct” objective would be, especially as we start using these techniques in production environments where they have a huge impact.
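To make the point about objective functions concrete, here is a minimal sketch, assuming a toy feed of three made-up posts, each with a hypothetical predicted engagement score and a hypothetical credibility score. Ranking by engagement alone puts the clickbait first; subtracting a penalty for low credibility (the weight `lam` is an arbitrary illustrative value) changes the order. This is not how any real feed works, only an illustration of how the choice of objective decides the outcome.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    engagement: float   # hypothetical predicted probability of a click
    credibility: float  # hypothetical score in [0, 1]; 1 means well sourced


def engagement_only(post: Post) -> float:
    # Objective that optimizes engagement and nothing else.
    return post.engagement


def penalised(post: Post, lam: float = 0.6) -> float:
    # One possible alternative: engagement minus a penalty for low credibility.
    return post.engagement - lam * (1.0 - post.credibility)


def rank(posts, objective):
    # Sort titles from highest to lowest objective value.
    return [p.title for p in sorted(posts, key=objective, reverse=True)]


posts = [
    Post("Shocking miracle cure!", engagement=0.9, credibility=0.1),
    Post("Budget session summary", engagement=0.5, credibility=0.9),
    Post("Local election results", engagement=0.6, credibility=0.8),
]

print(rank(posts, engagement_only))
print(rank(posts, penalised))
```

Deciding how large `lam` should be, or whether a credibility score can be measured at all, is exactly the hard part the paragraph above alludes to.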

Comically, ML techniques don’t seem very different from a robot that has placed everyone’s brain (with advanced life support, of course) in a vat full of endorphins, dopamine and serotonin. The robot just wanted to make everyone “happy”.

| What’s with all the terminology? – ML in a nutshell

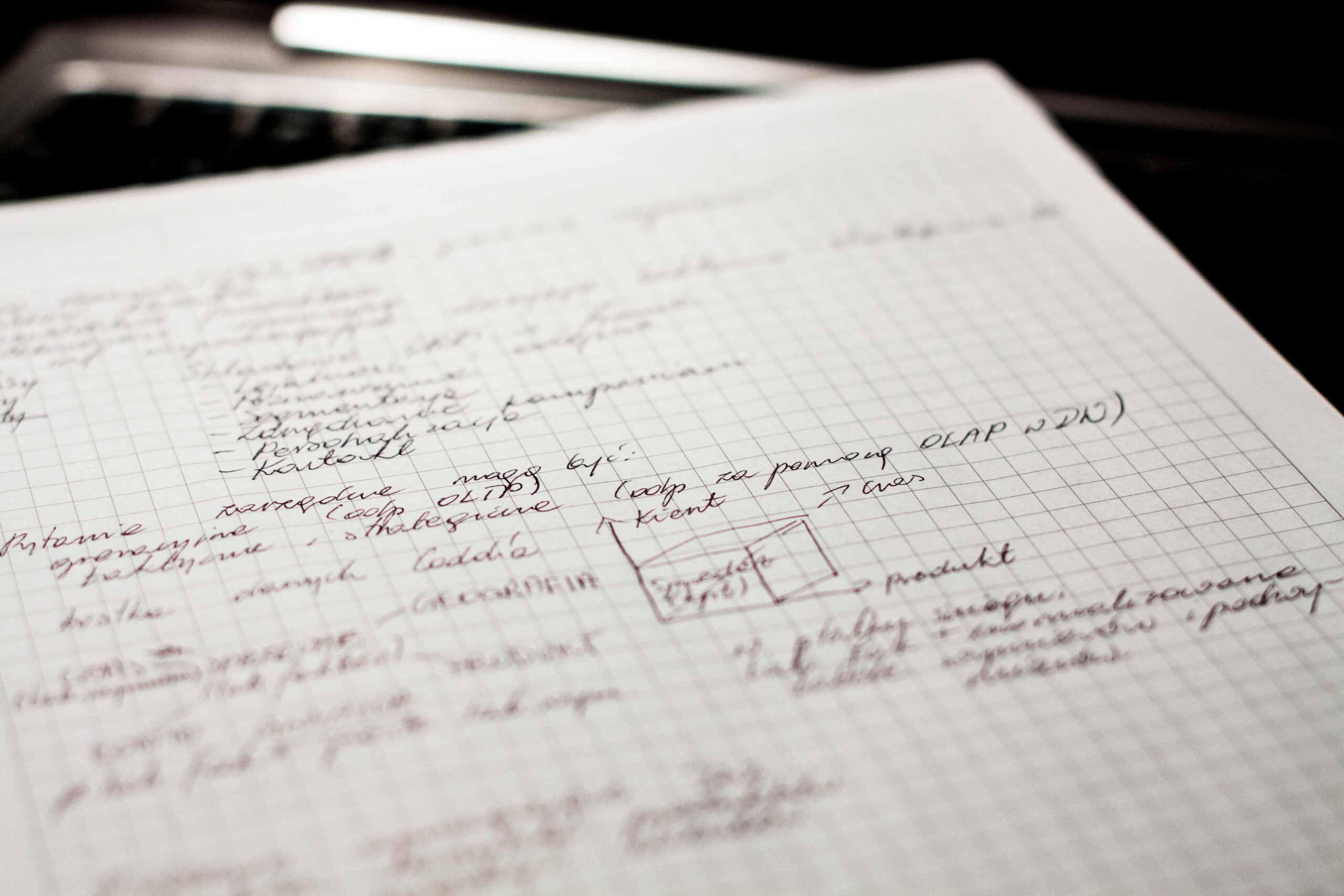

Machine Learning (ML) techniques are statistical tools for automatically learning patterns and drawing inferences from semi-structured data (say, labelled images, or images with bounding boxes around a person) or unstructured data (like a random collection of articles). Computational models built from one or more of these techniques, when fed data, “learn” the parameters/variables of a designed model, and these can in turn be used for tasks like classification, prediction and ranking, among others. A common way of thinking about ML techniques is to say that the aim is to learn a target function by making inferences from a given set of data points. Further, it is often useful to formulate ML techniques as “optimization problems”, wherein completing a task amounts to optimizing (maximizing or minimizing) some “objective” (a mathematical representation of what we wish to do) while satisfying certain “constraints” (conditions that must be respected) relevant to the problem at hand. |
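As a concrete instance of the “optimization problem” framing, here is a minimal sketch, assuming the simplest possible setting: fitting a straight line y = w·x + b to a handful of made-up points by gradient descent, with mean squared error as the objective to minimize. The names `mse` and `fit`, the learning rate and the number of steps are illustrative choices, and no constraints are imposed in this example.

```python
def mse(w, b, xs, ys):
    # Objective: mean squared error between predictions w*x + b and targets y.
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit(xs, ys, lr=0.05, steps=2000):
    # Gradient descent: repeatedly step the parameters against the gradient
    # of the objective, starting from w = b = 0.
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated from y = 2x + 1
w, b = fit(xs, ys)
print(round(w, 3), round(b, 3), mse(w, b, xs, ys))
```

The “learning” here is nothing more than the parameters w and b drifting towards values that make the objective small; a constraint (say, requiring w ≥ 0) would restrict which values they are allowed to take.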

Biases: Known and Unknown

Let’s look at another ML problem that has caused an uproar. Computer scientists at Shanghai Jiao Tong University recently investigated whether the likelihood of criminal behaviour could be inferred from facial features (the images used were not mugshots). The researchers maintain that the data sets were controlled for race, gender, age and facial expression. Now, some of us may feel it’s the start of a real-life Minority Report, the Spielberg movie about oracles that predict crimes, and the ethical complications that follow. Others, however, dismiss this research as scientists playing with fun problems. The truth probably lies somewhere between the two extremes.

Some of us may feel repulsed by the use of facial features, based on our moral predispositions, but how different is it really from the other details already used to predict the likelihood of reoffending? Such solutions have usually been fraught with problems such as latent racism, with the algorithm implicitly modelling ethnicity as a hidden variable in the data and thus influencing the decisions of the judiciary and the police.

A simple explanation for a lot of this is that the data is to blame, and the algorithm only learns the biases present in the data. What this really means is that the ML system has picked up on a trend that is specific to the data at hand but does not generalize well. For example, a set of images in which coffee mugs only ever appear in people’s hands may lead a model to believe that coffee mugs don’t exist without hands.
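The coffee-mug example can be reproduced with the crudest possible “model”, a frequency estimate. In this minimal sketch the training set is made up: each hypothetical example records only whether a hand is visible and whether a mug is present, and every mug happens to be held in a hand. Estimating the probability of a mug from these counts gives exactly zero whenever no hand is visible, because the data never showed otherwise.

```python
from collections import Counter

# Hypothetical training set of (hand_visible, label) pairs.
# Every mug in this toy data happens to be held in a hand.
train = [
    (True, "mug"), (True, "mug"), (True, "mug"),
    (False, "no_mug"), (False, "no_mug"), (True, "no_mug"),
]

counts = Counter(train)


def p_mug_given_hand(hand_visible: bool) -> float:
    # Maximum-likelihood estimate of P(mug | hand_visible) from raw counts.
    mugs = counts[(hand_visible, "mug")]
    total = mugs + counts[(hand_visible, "no_mug")]
    return mugs / total if total else 0.0


print(p_mug_given_hand(True))   # 0.75
print(p_mug_given_hand(False))  # 0.0
```

Nothing in the estimation procedure is wrong; the bias comes entirely from what the training data did and did not contain.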

Extrapolating from that line of thought, if we account for such biases in our data, or add constraints to our model so that it generalizes better, we ought to be good. But adding such constraints is itself fraught with issues: we would need to understand the bias in order to come up with constraints that remove it. Moreover, this thought process fails to account for biases that may not be readily apparent.

Let’s take word2vec, for instance. The word2vec scheme simply takes a large corpus of textual data and encodes each word as a vector, i.e. a set of numbers (or a point in an n-dimensional space) that can be manipulated and transformed.
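What “manipulated and transformed” means can be shown with a minimal sketch. The vectors below are hypothetical and hand-picked in three dimensions so that the arithmetic works out; real word2vec vectors are learned from a corpus and usually have a few hundred dimensions. Similarity between words is measured with the cosine of the angle between their vectors, and the well-known analogy “man is to king as woman is to ?” is answered by finding the word closest to king − man + woman.

```python
import math

# Hypothetical toy "embeddings", chosen by hand for illustration only.
vectors = {
    "king":  [0.8, 0.9, 0.1],
    "queen": [0.8, 0.1, 0.9],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.5, 0.5],
}


def cosine(u, v):
    # Cosine similarity: 1 means same direction, 0 means orthogonal.
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def analogy(a, b, c):
    # "a is to b as c is to ?": find the word whose vector is closest to b - a + c,
    # excluding the three query words themselves.
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))


print(analogy("man", "king", "woman"))  # queen
```

The same arithmetic that makes such analogies work is what lets associations present in the training corpus, desirable or not, surface in the vectors.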

This popular word embedding, for instance, was found to be sexist. Sexism isn’t the first thing that comes to mind when you design word embeddings, is it?

A good example of its impact can be seen in search and recommendation systems that employ such a technique. A search for “computer programmer resumes” may rank men more highly than women if the phrase “computer programmer” is more closely associated with men. As the researchers showed in their work, this particular form of sexism may not be difficult to account for once identified, by learning a vector-space transformation. However, the point to note is that nowhere in the original methodology was the embedding tested against such criteria. The onus of identifying undesirable tendencies and correcting for them lay with the users of the embedding.
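The idea behind such a vector-space transformation can be sketched in a few lines, under strong simplifying assumptions: the vectors are hypothetical three-dimensional toys, a single “gender direction” is estimated from just the pair he/she, and a gender-neutral word is corrected by removing its component along that direction (a projection). The researchers’ actual method is more involved, estimating the direction from several word pairs and applying further steps; this is only the core geometric operation.

```python
import math

# Hypothetical toy vectors; "programmer" deliberately leans towards "he".
vectors = {
    "he":         [1.0, 0.2, 0.0],
    "she":        [-1.0, 0.2, 0.0],
    "programmer": [0.4, 0.1, 0.9],
}


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def cosine(u, v):
    return dot(u, v) / math.sqrt(dot(u, u) * dot(v, v))


def normalize(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


# Gender direction estimated from a single word pair (a simplification).
g = normalize([a - b for a, b in zip(vectors["he"], vectors["she"])])


def neutralize(v):
    # Subtract the projection of v onto g, keeping only the part orthogonal to g.
    proj = dot(v, g)
    return [x - proj * gi for x, gi in zip(v, g)]


p = vectors["programmer"]
debiased = neutralize(p)
print(cosine(p, vectors["he"]) > cosine(p, vectors["she"]))  # True
print(math.isclose(cosine(debiased, vectors["he"]),
                   cosine(debiased, vectors["she"])))        # True
```

Note that the correction only works because someone first identified the bias and chose the word pair defining it, which is the limitation the paragraph above points out.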

In fact, one could even argue that not all biases can be known, and corrected for, beforehand. It’s important to realize that some bias will always creep through. How do we quantify and formalize human notions of bias, hidden or otherwise? These conundrums push us to think harder about ways to formalize human values and add them as constraints.

Looking Beyond the Metrics

As we saw in the case of word2vec, the researchers developed a transformation to reduce the sexism present in word embeddings. Similarly, there has been work on understanding what is being learned by other Machine Learning models, even really complex ones like autoencoders. So, yes, there have been some strides in understanding and evaluating what these models learn.

Still, there is a long way to go. There has been very little research on the limitations of the ML systems in use today. Scientists have developed eyewear bearing a pattern that is enough to fool commercial systems into misidentifying people. Image classification models can also be fooled 99% of the time by adding noise that is imperceptible to humans. Now imagine a self-driving car thwarted by an “innocent” pattern (like that of a zebra crossing) on the back of another car.
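Why tiny, imperceptible changes can be enough is easiest to see on a linear model. This minimal sketch applies the fast gradient sign method (FGSM) to a hypothetical linear classifier with 100 input features, standing in for an image classifier: because the gradient of a linear score with respect to the input is just the weight vector, nudging every feature by a small `eps` against the sign of its weight shifts the score by `eps` times the sum of the absolute weights. The weights, input and `eps` are made-up values; this does not reproduce the eyewear or 99% results mentioned above, only the mechanism.

```python
def score(w, x):
    # Linear classifier: the input is labelled positive if the score is above zero.
    return sum(wi * xi for wi, xi in zip(w, x))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, x, eps):
    # For a linear score the gradient with respect to x is w, so the
    # fast-gradient-sign step moves every feature by eps against sign(w).
    return [xi - eps * sign(wi) for xi, wi in zip(x, w)]


n = 100
w = [1.0 if i % 2 == 0 else -1.0 for i in range(n)]  # hypothetical weights
x = [0.5 + 0.01 * wi for wi in w]                     # hypothetical input, score about +1

x_adv = fgsm(w, x, eps=0.02)
max_change = max(abs(a - b) for a, b in zip(x, x_adv))
print(score(w, x) > 0, score(w, x_adv) > 0, round(max_change, 3))
```

No single feature moves by more than 0.02, yet the small changes all push in the same direction and add up across 100 features, flipping the label; real images have far more features, which makes the effect stronger.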

A clear line of research, and of opportunity, thus lies in finding ways to evaluate ML solutions along dimensions of scrutiny beyond the ones mentioned in the sections above. Are these systems safe? Are these systems humane? All of these will be important problems to tackle in the future.

Learning from Hype

Technology has become a focal point of the development narrative, and that narrative will be heavily influenced by systems and solutions built on ML. ML is poised to be used heavily in public policy, from helping farmers sow seeds to detecting lead poisoning. We are all well aware of the hype behind ML, but its application and understanding in social, political and administrative situations are not very well known.

For instance, do we have the right to an explanation of a decision, score or recommendation given by an ML algorithm? For ML systems deployed by the government, this directly amounts to transparency of the state machinery. But it’s not always possible for ML algorithms to be interpretable, and interpretability is sometimes even in tension with accuracy. Is there a way to ensure transparency in such scenarios through administrative means? Is it possible to engineer more interpretable systems?

A big problem with the proliferation of technology and algorithms is the belief that they are somehow objective in nature: that the answers or confidence values we get are grounded in some sort of “scientific objectivity”. “It’s the computer that is computing the value. It can’t be wrong!” is a sentiment common among people not well acquainted with CS and ML, and a particularly susceptible candidate for this fallacy is the government.

Governments often buy into the ML hype, believing that it will solve many of their problems: some related to scale, some to efficiency and some to bringing about social change. Even VCs aren’t immune to such hype, given the recent RocketAI prank, in which a “troll” startup was unveiled with great pomp by researchers at a conference. The fake startup was worth tens of millions of dollars for a short while, as five major VC funds were interested in investing despite its advertised technology having no grounding in research or in real life.

However, the bitter truth seems to be that ML cannot solve every problem and in fact comes with its own baggage: issues to which governments and businesses should pay attention. It’s important to remember that ML is a tool, not unlike a knife, and not the panacea it seems to many.

I, Researcher

As researchers, it is important not to get swayed by cool ML prototypes and hype. To list just a few of the big issues that plague ML-based solutions:

- Unknown “correct” objective.

- Known and hidden biases in data, or a lack of foresight about the data.

- Lack of broad and real-world evaluation and testing methodologies.

- Incomplete understanding of ethical, political and administrative implications and impact.

In one conception of capitalism, consumers are said to express their preferences and values through how they choose to spend their money, which is termed dollar voting. Similarly, perhaps, and especially as programmers and computer scientists, we need to adopt code voting, i.e. let our research and engineering work reflect our preferences and values, at least to the best of our abilities. Some of the first steps towards this have already been taken by some of the leaders and visionaries of AI, who assembled at the Asilomar Conference on Beneficial AI in January 2017, at the initiative of the Future of Life Institute, and came up with the Asilomar AI Principles, a broad consensus on some 23 principles that we researchers and entrepreneurs must try to imbibe in our efforts.

What we really need today is not the mindless application of algorithms to problems without any big-picture insight into the change we are bringing to our world. Instead, we need to actively address these issues, and hope that we are able to maximize what we truly ought to: humanity.

What did you think of the piece? Write back to anurag.ghosh@research.iiit.ac.in with your thoughts.

I’d like to thank Arpit Merchant and Abhijeet Kumar for their valuable inputs.

This article is part of the column ‘Eye to the Future’, which is dedicated to long-form articles on the contemporary and future impact of technology and scientific progress on society. This column is brought to you with the help and assistance of guest editor Tushant Jha.

Call for pitches: Interested in contributing to this column? Shoot an email to ping@students.iiit.ac.in with your pitch for the column.
