Mama, Put My GPTs in the Ground

The irony of the situation is not lost on me. As a student at a research institute, continually exposed to the innards of AI, I have a vested interest in the development of this domain – as do several, several others among my peers, if not the majority.

But here I am, regardless, trying to make a stand against the use – and over-use – of AI and LLMs, even as they continue to permeate everyday life at large. I may be fighting a losing battle, but that shan’t make any of this worthless.

Most of this article shall be concerned with Generative AI and LLMs. This is quite simply a result of my greater familiarity with such models than with other systems.

I posit that knowledge of such a system’s inner workings allows for some amount of disillusionment: a level of disillusionment beyond simply prompting ChatGPT to write me a screenplay a few times and being dissatisfied with the outputs.

LLMs and Academic Integrity

I stare straight ahead at my potential hypocrisy in writing a section like this. It would be safe to assume that all of us here have, at some point, opened up our browsers to our LLM of choice and outsourced assignments and code-writing to this… strange little gizmo that seems to be able to think for us.

Does this get in the way of assessing ourselves? In the traditional sense, yes. But we may still be able to argue against that: “Oh, I just HATE the way ChatGPT writes, so I just use it to get me pointers, I’m still writing my answers on my own.” || “It’s not like GPT code is perfect, I still need to sit with it to debug and fix it.”

We may still be able to convince ourselves that we are learning, in spite of using these platforms. For that matter, most advocates of AI propose it as a tool to be leveraged to facilitate learning.

Underline: facilitate.

Identifying that an LLM is capable of making mistakes – hallucinating, as the term goes in the literature – opens up pathways to mitigate the chances of this happening. And yet, fundamentally, it remains naive and impractical to assume that this problem can be solved completely.

An LLM is not fundamentally designed to provide factual information; at its core, it is trained to predict the next token. When it does produce facts, that is merely a consequence of being trained on large amounts of data which, for the sake of argument, happens to contain factual information by some happy accident. The old design paradigm still holds: Garbage In, Garbage Out. Good output is largely the consequence of good training data.

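Abstracting away several architectural details, sheer scale combined with almost equal probabilities in next-token prediction is what manifests to us as a hallucinated output: when several continuations look equally plausible, the model may pick one that is fluent but false. And while this may be mitigated, it will likely never be removed altogether.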
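To make the idea of “almost equal probabilities” a little more concrete, here is a minimal toy sketch in Python. It is not how any particular model is implemented: the prompt, the three candidate tokens and their scores are all invented for illustration, and a real model chooses among tens of thousands of tokens using scores produced by the network itself. All it shows is the final sampling step, where near-equal scores are turned into probabilities and one token is drawn at random.

```python
import math
import random
from collections import Counter


def softmax(logits):
    """Turn raw model scores (logits) into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical toy setup: three candidate next tokens after the prompt
# "The capital of Australia is", with made-up, nearly equal scores.
candidates = ["Canberra", "Sydney", "Melbourne"]
logits = [2.1, 2.0, 1.9]
probs = softmax(logits)


def sample_next_token(rng):
    """Pick one candidate at random, weighted by its probability."""
    return rng.choices(candidates, weights=probs, k=1)[0]


rng = random.Random(0)  # fixed seed so the run is repeatable
counts = Counter(sample_next_token(rng) for _ in range(1000))

for token, p in zip(candidates, probs):
    print(f"{token:<10} p={p:.2f}  sampled {counts[token]} / 1000 times")
```

With these made-up scores the probabilities come out at roughly 0.37, 0.33 and 0.30, so the correct answer is the single most likely token and yet is drawn well under half the time; in the remaining runs the model produces a perfectly fluent, confident, wrong sentence. Techniques such as lowering the sampling temperature can sharpen this distribution, which is one reason mitigation is possible, but they cannot make the underlying scores correct.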

What this means for those of us using GPT outputs for our assignments is that, in trying to optimize away one problem, we have taken on a different one altogether: checking whether what the model tells us is actually true. This need not be a bad thing in itself, but it does warrant some change in the way assignments are evaluated.

LLMs and Personal Relationships

But maybe you – excuse my bluntness – simply don’t care. What we’ve discussed until now is work, and there’s more to life than work.

Well, let’s talk about other ways LLMs have entered our daily lives – aside from work.

The good: LLMs are great problem solvers. “How do I stick to a consistent schedule without burning out?” || “How do I start training for a marathon by the end of next year?”

A by-product of the sheer scale of LLM training data is the ability to provide extremely coherent, reasonable-sounding answers to questions like these, thanks in large part to the models being trained on volumes of questions and answers from internet forums, including but not limited to Reddit. To an extent, natural-language queries to LLMs seem to have overshadowed our old habit of using a search engine and sifting through the results ourselves.

We might even say that the Internet has become more accessible since the advent of ChatGPT.

The Bad and The Ugly: “I feel isolated from my friends. They don’t seem to value me as a person. Do I cut them off from my life?”

Can an LLM provide an answer to a question like this?

(It can, and of course it does.)

Better yet, we see the response framed like the beginning of a conversation. A chat with a friend who’s not around.

“I’ve tried. They promised to change. They never did,” you type back.

The reply comes back as quickly as the last one did.

A measly two messages before you’re advised to “create more distance”.

I am struck by a parallel I am compelled to draw. What we’re seeing here is a modern version of a patient looking up their symptoms on WebMD and self-diagnosing before ever approaching a certified clinician. Only now, what we hear is a lot more convincing since, after all, it reads as natural text.

As though a real person were talking to you.

I think I understand at least part of why this happens. From a technical point of view, we may credit this to RLHF – “Reinforcement Learning from Human Feedback” – or to the broader post-training approaches used to make a model more “helpful” or suitable for a chat-based scenario. From a strictly human point of view, maybe being “helpful” here means avoiding conflict. And the easiest way to avoid conflict is to agree.

There is comfort in being “heard” like this: being able to pour your heart out without the risk of getting hurt or judged by another person. But that comfort comes with its own set of risks.

As a voice sought for advice, an LLM very rarely brings its own point of view into a discussion – and rarer still does it force you to re-examine the thoughts that led you to your decision, unless the conversation very obviously triggers the model’s safety guardrails.

A helpless user is instead given something more like an echo chamber, reinforcing the biases and beliefs they bring into it.

I see two broad problems with this. One: the model has no notion of accountability for the advice it gives. The probabilistic nature of an LLM’s inner workings exacerbates this, plausibly leading to very different opinions simply from a change in the phrasing of a prompt. Safe advice comes from a place of consistent value systems – something which is severely lacking here.

And two: this pseudo-interaction is stupidly addictive. It feels good – feeling seen and understood at an otherwise unprecedented level. An AI sidekick for someone who’s felt misunderstood for the longest time.

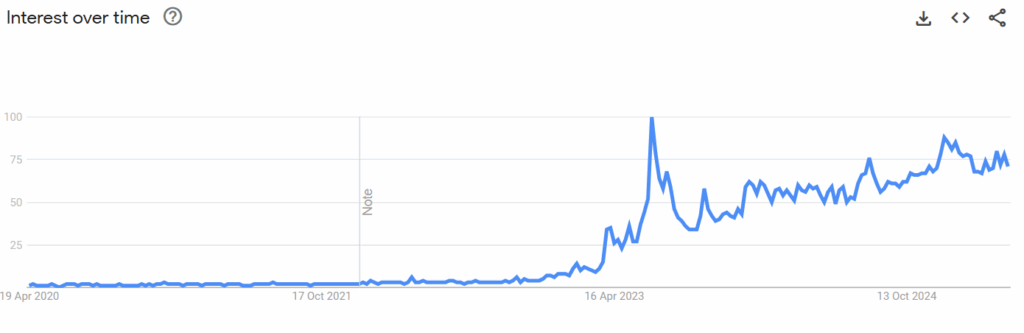

Above is a screenshot of Google Trends for the search term ‘AI Girlfriend’. Following a spike in 2023, closely aligned with Character.AI releasing a mobile app for its service, interest increases consistently over the following years, likely as more people opt in to this out of desperation and loneliness.

I suppose I don’t need to go into much detail about why this is problematic. As more people opt for AI “relationships”, we only foster further loneliness, blocking out avenues and opportunities for real connection.

LLMs and Creativity

I want to start this section by linking you to an ad for the iPhone 16 – or more specifically, the Apple Intelligence that ships with the device.

As a writer, I am simply unable to remain unbiased here: I foam at the mouth just thinking about it. Now, that needn’t mean too much to you, the reader – but I hope you shall allow me to explain.

It is an undeniable fact that Generative AI has made creative work such as writing and, more recently, art far more accessible to the general public. The most recent example of this is the Ghibli filter trend. There is magic in this almost fairytale aesthetic – and if GPT were the culprit in bringing this magic to places Hayao Miyazaki never reached, we ought not condemn it for that.

Our problem here is with how these works are leveraged for monetary gain: the stereotypical “AI Guy” who promises to make a quick buck by self-publishing AI-generated “novels” on Kindle; the hyper-optimized, AI-generated Instagram Reels and YouTube Shorts that can be mass-produced so easily that they simply flood the entire space.

As creators, this matters to us because of a view (an altogether romanticized one, granted) that such work is untrue to the spirit of the craft we’re pursuing. Simply put, we believe genuine creators still uphold higher standards, and that audiences are still seeking more than basic, algorithmic noise.

I shall now try to tie this back to the linked ad at the beginning of this section. What we see is the undermining of actual human effort. The ad subtly tickles you with an almost perverse question: why go through all the effort for a birthday present, when you can just make a quick AI video?

We face a different kind of problem here: we no longer take issue with the LLM itself, but rather with the users who choose to use it in such a counter-productive way. And so, for once in this article, we have no qualms with the technical aspects of the model.

On the Importance of Deliberate Action

If I were to condense this article so far into a single line, I’d probably go with this:

If AI was meant to optimize the way you live your life, what exactly does living entail when you “optimize” everything?

The danger is not in becoming dependent on these tools. The danger is in forgetting what it felt like to struggle. To wonder. To fumble for the right word, or stay up all night trying to finish something that mattered to you. The inefficiency of effort, the unpredictability of dialogue, the mess of creativity — these are not bugs in the system. They are the system.

And when we begin to smooth those edges, when we replace curiosity with convenience, what’s left is a strange half-life: polished, but profoundly meaningless.

My initial drafts of this article were supposed to focus more on the recent Ghibli art trend that followed the image-generation update to GPT-4o. I made it a mission of mine to try and recreate that aesthetic myself on paper. I never got very far with that draft. But it did remind me that the process is meant to take time.

I drew a little pencil sketch of Hatsune Miku, trying to get back into the swing of drawing anime characters. After messing up the proportions at least seven times, I decided to steal a friend’s geometry box (I’m surprised he still had one) and relegated myself to strictly following guidelines.

It took me at least an hour and a half, and I never went back to shade it in. Worried I’d mess it up even further.

The effort is hard. But undoubtedly worth it. Always.
